26 Tested Email Marketing Best Practices That Drive Results

Four words, twenty-seven letters. Implement them and skyrocket your business like a pro: email marketing best practices!

Today, we’re going to dive into the best email practices to help you grow your traffic and sales using your mailing list.

If you’re looking for the perfect all-in-one tool to manage your email list and deliver high-converting campaigns, join Moosend for free.

Email Marketing Best Practices

Here’s a TL;DR version of the tips and email marketing best practices covered in this guide.

- Experiment and deliver your emails at the right time

- Establish the appropriate email frequency

- Give subscribers compelling reasons to click

- Plan ahead for special dates

- Use the curiosity gap in your subject lines

- Create an email drip campaign (onboarding)

- A/B test your email campaigns

- Write engaging email copy

- Use high-quality visuals

- Optimize the preview text

- Have clear CTAs

- Use storytelling to build trust

- Build your own list

- Segment your email list

- Use double opt-in

- Keep your email list clean

- Allow your audience to unsubscribe easily

- Personalize your email campaigns

- Automate your messages

- Avoid a ‘no-reply’ sender address and increase legitimacy

- Make your emails time-sensitive

- Remind subscribers why they signed up

- Make a promise from Day 1 (and keep it)

- Optimize your landing pages

- Reward loyal email subscribers

- Integrate email marketing with social media

1. Experiment and deliver your emails at the right time

What’s the best day and time to send your messages to get the best email open rates?

Search online and you’ll see Tuesday at 10 am come up again and again as the answer. This advice has appeared on some of the best marketing blogs and has been circulating for years, so, naturally, inboxes get bombarded at that exact day and time. As a result, it can have the opposite of the intended effect.

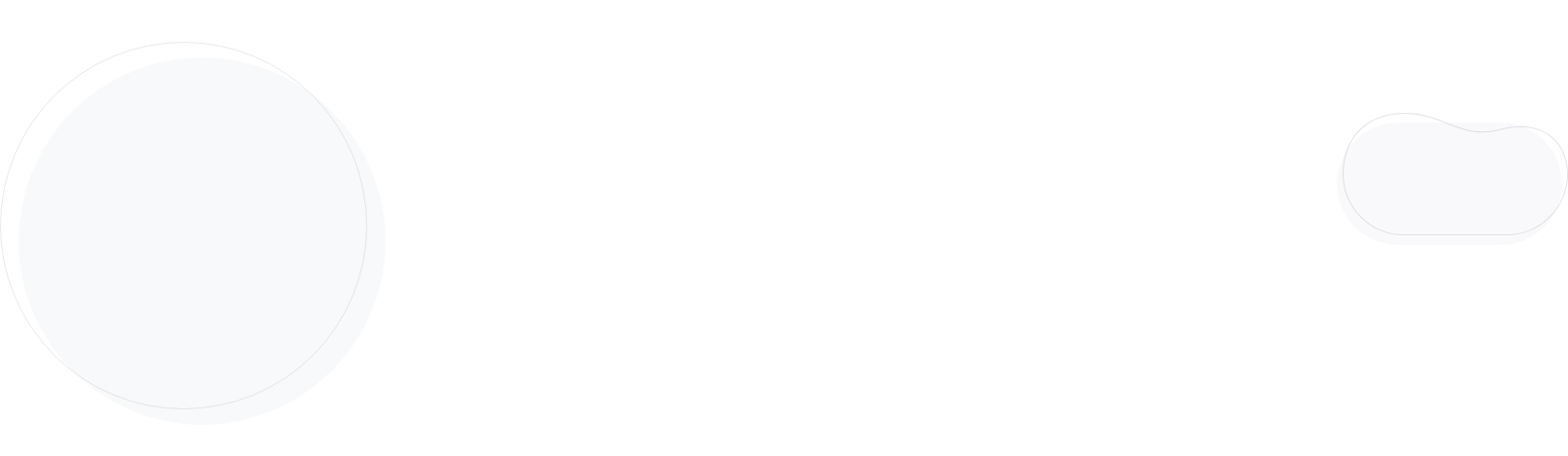

Our analysis of over 10 billion emails sent through our platform showed that the best day and time to send your emails is Thursday between 8 and 9 am.

However, that doesn’t necessarily mean it’s right for YOUR email list. When it comes to sending emails, you need to know how your target audience behaves.

The easiest way to do that is to collect data through email tracking and experiment until you find that sweet spot.
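If you can export open events from your email tracking, a few lines of Python are enough to see which weekday/hour slots your own audience opens in most. The sketch below assumes you already have a list of open timestamps; the sample data and the `best_send_slots` helper are hypothetical, not a Moosend API.

```python
from collections import Counter
from datetime import datetime

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def best_send_slots(open_timestamps, top_n=3):
    """Count opens per (weekday, hour) slot and return the most common ones."""
    counts = Counter((WEEKDAYS[ts.weekday()], ts.hour) for ts in open_timestamps)
    return counts.most_common(top_n)


# Hypothetical open events pulled from your email tracking data.
opens = [
    datetime(2024, 5, 2, 8, 15),   # Thursday
    datetime(2024, 5, 2, 8, 40),   # Thursday
    datetime(2024, 5, 9, 8, 5),    # Thursday
    datetime(2024, 5, 7, 10, 30),  # Tuesday
]

for (day, hour), count in best_send_slots(opens):
    print(f"{day} {hour:02d}:00 -> {count} opens")
```

Treat open data as a rough signal: some mail clients (for example, Apple Mail with Mail Privacy Protection) preload content, which can register opens that no person made.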

2. Establish the appropriate email frequency

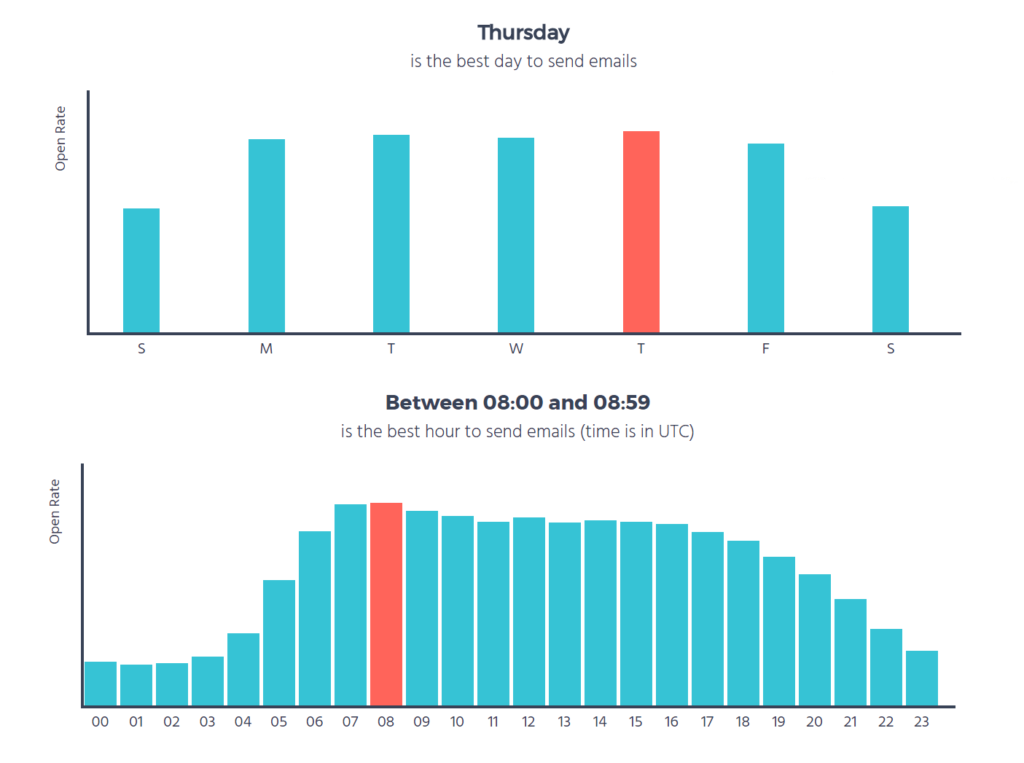

According to our data, the optimum frequency (to get your emails opened) is 2-5 campaigns per month.

Following this email marketing best practice, you can minimize the chance of your campaigns ending up in your subscribers’ trash folder. This, in turn, promotes your email deliverability.

Building up hype for your next campaign will make your audience appreciate you more and look forward to your next big email.

Think of it like this. An opt-in subscriber has given you a golden ticket: they’ve trusted you with their email address. So don’t abuse their inbox or resort to tactics such as email blasts.

With Moosend, establishing your frequency becomes effortless, since the platform allows scheduling as well as automatically resending your email campaign to subscribers who didn’t open it.

Sign up for a free Moosend account now and see for yourself!
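To illustrate the scheduling and resend logic described in this section, here is a minimal Python sketch, assuming you have per-recipient open and bounce data for a sent campaign. The `Recipient` type, the `resend_audience` and `can_send_more` helpers, and the monthly cap of 5 (the top of the 2-5 range cited above) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Recipient:
    email: str
    opened: bool
    bounced: bool = False


def resend_audience(recipients):
    """Hypothetical helper: recipients who neither opened nor bounced get the resend."""
    return [r.email for r in recipients if not r.opened and not r.bounced]


def can_send_more(campaigns_this_month: int, monthly_cap: int = 5) -> bool:
    """Stay within an assumed cap of 5 campaigns per month."""
    return campaigns_this_month < monthly_cap


sent = [
    Recipient("ana@example.com", opened=True),
    Recipient("ben@example.com", opened=False),
    Recipient("cy@example.com", opened=False, bounced=True),
]

if can_send_more(campaigns_this_month=3):
    print(resend_audience(sent))  # ['ben@example.com']
```

Excluding bounced addresses from the resend matters as much as excluding openers, since repeated sends to failing addresses count against your sender reputation.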

3. Give subscribers compelling reasons to click

Want to get better click-through rates? Then give your subscribers incentives to open and click through your email.

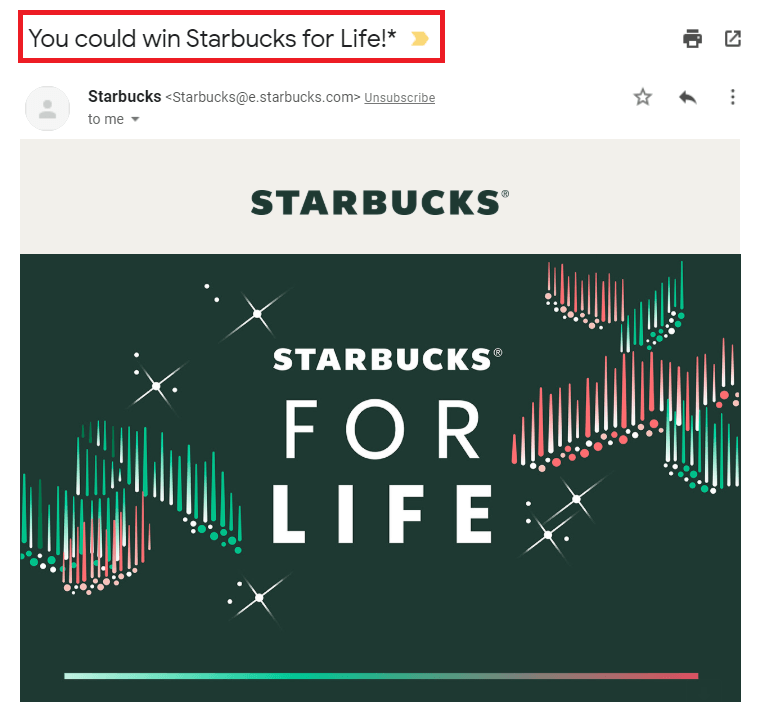

Consider using a strong incentive in your subject line, such as free shipping or a buy-one-get-one-free offer. Here’s a winning example from Starbucks.

That’s a great subject line right there: “You could win Starbucks for life!”. The email instantly catches people’s attention and stands out in the inbox. People are bombarded with promotional emails, so you have to make yours “the one.”

4. Plan ahead for special dates

Specific dates on the calendar, like Christmas, Thanksgiving, and Easter, are excellent marketing opportunities for every company. And email is your best marketing channel to make the most of them!

Here’s what you can do, whether it’s Halloween or Valentine’s Day:

Plan ahead of time and craft special offers instead of relying solely on last-minute campaigns, even if that means launching a Valentine’s Day email drip campaign on January 22!

Create your seasonal email templates early to save time, beat your competitors, and capitalize on those important dates.

Moosend offers a wide variety of stunning newsletter templates that you can customize to your taste. If you still haven’t got yourself an account, what are you waiting for?

5. Use the curiosity gap in your subject lines

Have you heard of the curiosity gap before? In short, you provide enough information to hook your audience and make them curious. But you don’t provide enough information to satisfy that curiosity until they click.

In recent years, the practice of writing enticing headlines that appeal to our desire to ‘fill in the gaps’ has been adopted by viral sites like Upworthy and BuzzFeed.

If you give away everything in your subject line, then a recipient has no particular reason to open your email. But holding back some information while providing enough to draw them in can lead to a serious boost in your open rate.

Let’s look at an example. Here are two possible email subject lines:

- How We Used The Curiosity Gap To Boost Our Open Rate By 8%

- This Simple Copywriting Trick Boosted Our Email Open Rate By 8%

In the first subject line, we give away the fact that we’re talking about the curiosity gap. However, in the second, we simply allude to a ‘trick.’

The reader has to open the email (or click through to the website) to find out what that trick is. Which subject line do you think would get more opens? Spoiler: the second one.

So, use the power of the curiosity gap (in a non-spammy way) to give a serious boost to your open rate.

6. Create an email drip campaign (onboarding)

Marketing automation is a powerful tool in the hands of modern email marketers. If you want to automate time-consuming tasks while nurturing your subscribers, you need a smart drip campaign to convince them to act. All this, without being spammy.

Drip campaigns are a series of emails usually sent to new subscribers after they register through your newsletter signup forms. Luckily, we have tons of fantastic drip campaign examples to get you inspired.

The most popular series is a welcome email drip campaign. You can launch a sequence of emails with different incentives to onboard new customers.

The first email in the sequence can contain a discount for the first order. With the next one, you could incentivize them to join your loyalty program. Finally, the third email can introduce your team, aka the people behind the brand. In this way, you promote your brand image and humanize your brand.
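The three-email welcome sequence described above can be sketched as a simple schedule of day offsets from the signup date. The offsets (0, 3, and 7 days) and the subject lines are hypothetical placeholders; tune them to your own audience.

```python
from datetime import date, timedelta

# Hypothetical welcome series: (days after signup, subject line).
WELCOME_SERIES = [
    (0, "Welcome! Here's a discount on your first order"),
    (3, "Join our loyalty program"),
    (7, "Meet the team behind the brand"),
]


def schedule_drip(signup_day: date, series=WELCOME_SERIES):
    """Return (send_date, subject) pairs offset from the signup date."""
    return [(signup_day + timedelta(days=offset), subject) for offset, subject in series]


for send_on, subject in schedule_drip(date(2024, 1, 1)):
    print(send_on.isoformat(), subject)
```

Keeping the series as data rather than hard-coded dates means each new subscriber gets the same spacing, whenever they happen to sign up.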

7. A/B test your email campaigns

Crafting effective email campaigns is of the utmost importance, and A/B testing is the most reliable way to get there!

Running an A/B test is a relatively easy process, and it helps you improve every part of an email campaign. In short, you send two variations of the same campaign to two different groups of recipients and compare the results.

Depending on your goals, you can test:

- subject lines

- preheader text (keep in mind your mobile device users)

- content of your email: images and copy

- CTA boxes (colors, copy, placement)

- your email campaign tone and length

Whatever you choose to test, don’t forget to check your metrics. Your open rates are essential if you’re checking variations of your subject lines. Similarly, monitoring your click rates when you come up with different email content is crucial.

Finally, the winning combo is the one that will give you the best conversion rate.

As one of the best Mailchimp alternatives out there, Moosend offers effortless A/B split campaign testing.

Pro Tip: Never forget to send test emails to yourself to check if your control and variations look good. An incomplete campaign sent by mistake will ruin your experiment, and you’ll have to start again.
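Before declaring a winner, it helps to check that the difference between variations isn’t just noise. One common approach (an assumption here, not a feature of any particular platform) is a two-proportion z-test on the open or click rates of the two groups, sketched below in plain Python.

```python
from math import erf, sqrt


def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Two-sided z-test for the difference between two rates (e.g. open rates)."""
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    se = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        return 0.0, 1.0  # both groups at 0% or 100%: nothing to compare
    z = (rate_b - rate_a) / se
    # Standard normal CDF expressed with erf; doubled tail area for a two-sided test.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


z, p = two_proportion_z_test(successes_a=200, sent_a=1000, successes_b=250, sent_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With 200 opens out of 1,000 sends for variation A and 250 out of 1,000 for B, z comes out at about 2.68 and p at roughly 0.007, so the lift is unlikely to be chance. Decide your sample size and significance threshold before sending, rather than stopping the test as soon as one variation pulls ahead.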

8. Write engaging email copy

One of the email marketing best practices that shouldn’t be overlooked is writing effective email copy. First, you’ll have to establish your brand voice. This will keep your campaigns consistent across all channels, not only email.

Your email copy needs to be valuable and precise while having actionable wording in order to inspire action and blend beautifully with your CTAs. You don’t want to tire subscribers with walls of text. The main goal is to emphasize the value of clicking through the email and lead people towards the CTA.

A/B testing of the email copy is a must in order to find out what resonates better with your target audience.

9. Use high-quality visuals

As they say, “a picture is worth a thousand words”, so your emails need to incorporate stunning and eye-pleasing visuals. It goes without saying that your visuals must be high-quality and aligned with your brand (in terms of colors, style).

The main aim of visuals is to “reinforce” your email copy and create an emotional connection with your subscribers. GIFs are also an option to consider. However, be extra cautious, as they can change the tone of your email and aren’t always relevant.

Finally, be sure to include ALT text in your images. It not only helps people with visual impairments but can also be a lifesaver when images don’t load or are blocked by the email client. Relevant text will appear in place of the blocked images, which might encourage subscribers to enable them and engage with the email as originally intended.
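If you build emails in raw HTML, a quick automated check can catch images that have no ALT attribute at all. This sketch uses Python’s standard `html.parser`; the `images_missing_alt` helper is hypothetical. It deliberately accepts `alt=""`, which is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags like <img ... /> also arrive here via the default handle_startendtag.
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:  # alt="" is fine for decorative images
                self.missing.append(attributes.get("src", "<no src>"))


def images_missing_alt(email_html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(email_html)
    finder.close()
    return finder.missing


html = (
    '<img src="hero.png" alt="Spring sale: 20% off everything">'
    '<img src="logo.png">'
    '<img src="spacer.gif" alt="" />'
)
print(images_missing_alt(html))  # ['logo.png']
```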

10. Optimize the preview text

Getting the most out of your email’s preview text can work wonders. The preview text is essentially a snippet of copy from the body of your email that is typically displayed next to the subject line. According to a report, using a preheader can provide an almost 22% boost in both open and click rates.

So, instead of leaving your campaign’s effectiveness to Lady Luck, it’s wise to craft an enticing preview text that accompanies your subject line and builds upon it. In this way, you make your email more appealing to open and hopefully catch the readers’ attention right away.

With Moosend, this small but impactful optimization is straightforward, and you can find this in your campaign settings.

11. Have clear CTAs

To have a successful email marketing campaign, you need to persuade subscribers to take action. Give them too many choices, and they may take the wrong action, or worse, get confused and unsubscribe from your list.

Therefore, it’s critical that your subscribers know what you want them to do next! To do that, you need to seamlessly blend your beautifully designed CTA with your email design. Make it ridiculously clear what you want your users to do.

CTA design and placement play an equal role. Make sure your CTAs are visible as well as accessible, to cater to people using mobile devices. Moreover, it’s advisable to have your main call-to-action above the fold, as otherwise, the majority of your recipients won’t see it.

12. Use storytelling to build trust

People inherently love stories. They bypass the logical part of our brains and form deep connections through emotion. It’s been found that people are 22x more likely to remember something if it’s woven into a story.

Successful brands use stories in marketing to establish a strong bond with their customers. This, in turn, results in increased loyalty, better engagement rates, and most importantly, trust.

Stories can be personal, funny, and highly relatable to your target audience, which helps humanize a brand. That makes storytelling a powerful content marketing tool, able to engage and convert customers successfully!

If you’ve got a story that fits your email marketing strategy, make sure to share it.

13. Build your own list

It doesn’t come as a surprise that purchasing email lists is NOT an email marketing best practice you should follow, especially since the GDPR came into force.

Healthy open rates play a vital role in email marketing campaigns, so if you’re attempting to contact people who haven’t given their consent, your campaigns’ performance will soon plummet. Moreover, your emails run the risk of being blocked by major mailbox providers (Yahoo, Gmail, AOL, etc.).

So, you need to build your own email list organically! Since building a large list can’t happen overnight, be sure to use lead magnets (ebooks, industry reports, freebies, etc) to incentivize people to join your list. Building your own list ensures high engagement rates and increased deliverability.

If you happen to have “inherited” an email list, it might be good to verify that you have each individual’s consent.

All in all, it’s worth putting in the work. The benefits of building a list far outweigh the risks of buying one. We have an excellent guide on how to build an email list from scratch. Check it out here!

14. Segment your email list

Different customers have different needs. In order to reach them in the best way possible and deliver messaging that resonates with their needs and preferences, you have to create customer segments.

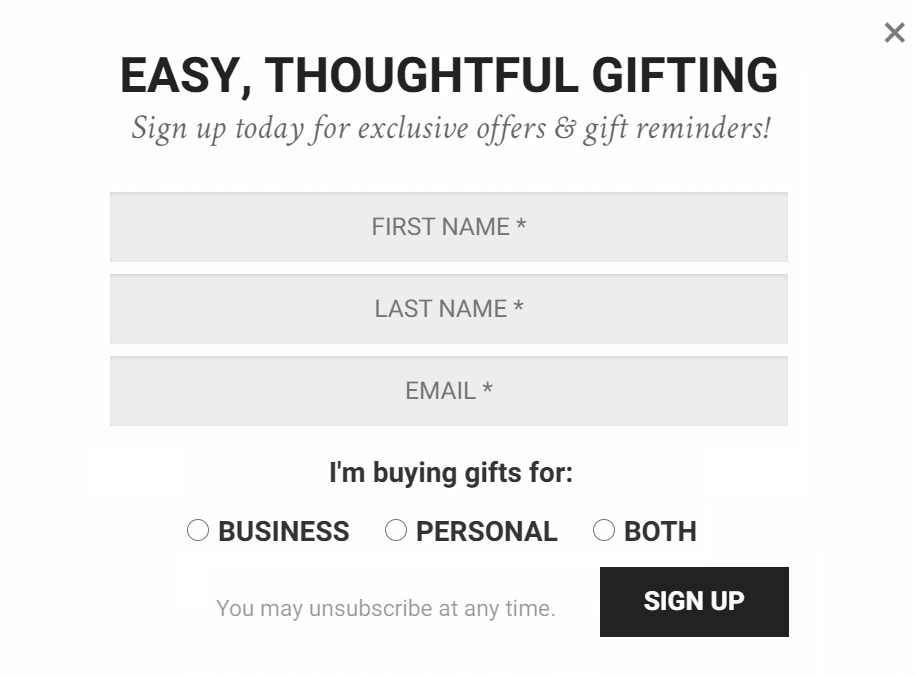

Segmenting your subscribers isn’t supposed to be a hassle. In fact, segmentation starts the moment you acquire your subscribers’ email addresses through your carefully crafted newsletter signup forms.

Generally, you can segment your audience based on their gender, location, lifestyle, on-site behavior, etc. In the above example, Baskits groups new subscribers based on their purchase preferences.

Proper segmentation of your list will allow you to tailor your messages to your customers’ specific needs and personalize them effectively. But that’s not all.

Advanced segmentation of your list can be vital in designing follow-up email campaigns. Let’s say you are launching a webinar. Some of your subscribers might miss that first email. By segmenting your mailing list, you can resend the registration email to subscribers who haven’t signed up yet, while delivering a reminder to those who have.
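Here is a minimal sketch of that webinar split, assuming each subscriber record carries a hypothetical `registered_for_webinar` flag: one segment gets the reminder, the other gets the registration email again.

```python
# Hypothetical subscriber records; the field name is an assumption.
subscribers = [
    {"email": "ana@example.com", "registered_for_webinar": True},
    {"email": "ben@example.com", "registered_for_webinar": False},
    {"email": "cy@example.com", "registered_for_webinar": False},
]


def split_by_registration(people):
    """Return (registered, not_registered) email lists."""
    registered = [p["email"] for p in people if p.get("registered_for_webinar")]
    not_registered = [p["email"] for p in people if not p.get("registered_for_webinar")]
    return registered, not_registered


reminder_segment, invite_again_segment = split_by_registration(subscribers)
print("Send reminder to:", reminder_segment)
print("Resend registration email to:", invite_again_segment)
```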

For more customer segmentation examples, check out our detailed guide.

15. Use double opt-in

When a new subscriber signs up for your emails, you can send them a link to confirm the subscription.

This process is your good old double opt-in, an extra step to verify your subscriber’s email address.

While the double opt-in adds an extra step to your subscribers’ journey, it’s one of the safest email marketing best practices you need. Quality over quantity!

If you’re still worried about your list potentially getting smaller, remember that using double opt-in will positively affect email deliverability.
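Under the hood, a double opt-in usually means emailing a link that carries a token only your server could have generated. The sketch below is one way to do that with Python’s standard `hmac` module; the URL, the key handling, and the helper names are assumptions, and a production version would also add an expiry time to the token.

```python
import hashlib
import hmac
import secrets
from urllib.parse import urlencode

# Assumption: in production, load a stable secret from configuration instead.
SECRET_KEY = secrets.token_bytes(32)


def confirmation_token(email: str) -> str:
    """HMAC-sign the normalized address so nobody can forge a confirm link for it."""
    normalized = email.strip().lower()
    return hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def confirm_link(email: str, base_url: str = "https://example.com/confirm") -> str:
    """Build the link sent in the confirmation email (base_url is a placeholder)."""
    query = urlencode({"email": email, "token": confirmation_token(email)})
    return f"{base_url}?{query}"


def is_valid_confirmation(email: str, token: str) -> bool:
    """Called when the link is clicked; only then is the subscriber marked as confirmed."""
    return hmac.compare_digest(confirmation_token(email), token)


print(confirm_link("ana@example.com"))
```

Until `is_valid_confirmation` succeeds, the address stays unconfirmed and receives nothing but the confirmation email.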

16. Keep your email list clean

It’s certainly good for the ego to look at the raw number of email subscribers. But if most of those subscribers never engage, they are, at best, chewing up resources and, at worst, hurting your overall deliverability.

Too many bounces and invalid email addresses can see your email marketing campaigns hit spam thresholds set by inbox providers. So, it is essential to clean your list regularly.

First of all, you need to remove invalid emails (hard bounces). A hard bounce means that the email address doesn’t exist on the server. Removing any hard bounces from your email list improves your sender reputation. If you use Moosend for your email marketing campaigns, you can set hard bounces to be automatically removed.

Secondly, it’s essential to monitor soft bounces. A soft bounce can happen if an inbox is full, or temporarily unavailable. You might not want to remove a soft bounce immediately. But if you’re getting a consistent soft bounce from an email address, then it’s probably worth removing it. A three-strike policy might be worth considering.

Last but not least, remove unengaged email subscribers. How long should you wait? While there’s no definitive answer, a good rule of thumb is that if someone hasn’t opened any of your emails for a year, they are probably not interested in your list anymore.
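The three rules above (remove hard bounces right away, apply a three-strike policy to soft bounces, and drop people with no opens for a year) can be expressed as a single filter. This is a sketch over a hypothetical `Subscriber` record; the field names and thresholds are assumptions to adapt. Subscribers who have never opened are measured from their signup date, so new sign-ups aren’t removed before they’ve had a chance to engage.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Subscriber:
    email: str
    subscribed_on: date
    hard_bounced: bool = False
    consecutive_soft_bounces: int = 0
    last_open: Optional[date] = None


def should_remove(sub: Subscriber, today: date,
                  soft_bounce_limit: int = 3,
                  inactive_after: timedelta = timedelta(days=365)) -> bool:
    if sub.hard_bounced:
        return True  # the address doesn't exist: remove immediately
    if sub.consecutive_soft_bounces >= soft_bounce_limit:
        return True  # three strikes on temporary failures
    # Never-opened subscribers are measured from their signup date.
    last_activity = sub.last_open or sub.subscribed_on
    return today - last_activity > inactive_after


def clean_list(subscribers: List[Subscriber], today: date) -> List[Subscriber]:
    """Keep only subscribers that pass all three hygiene rules."""
    return [s for s in subscribers if not should_remove(s, today)]
```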

17. Allow your audience to unsubscribe easily

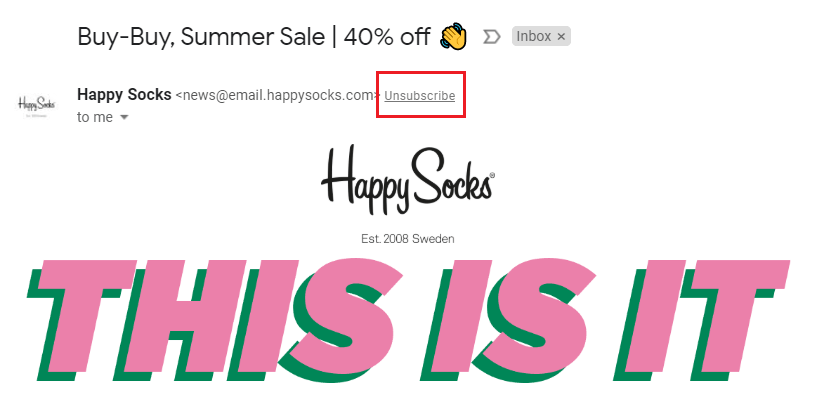

No matter how awesome your emails are, some users will want to unsubscribe. Perhaps they are no longer using your product, or maybe they had a career change. Unsubscribes are part of running a “clean” email list.

In numerous successful newsletter examples, an unsubscribe link appears right next to the sender’s email address. This link is usually added automatically by the email client (Gmail, Yahoo, etc.). You can also add your own unsubscribe button at the end of your email design.

While it may sound like an unorthodox email marketing best practice, unsubscribing must be effortless and instantaneous.

Avoid hiding the link or deliberately using small fonts, and make sure users don’t need to log back into their account to complete the process. Otherwise, frustrated subscribers are likely to hit the spam button instead, and those spam reports hurt your email deliverability.

At Moosend, we automatically add a one-click unsubscribe link to the bottom of every email to ensure you don’t forget about it!
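If you send through your own infrastructure rather than a platform that handles this for you, the unsubscribe link that mail clients show next to the sender is typically driven by the `List-Unsubscribe` header (RFC 2369), and one-click unsubscribe is signaled with `List-Unsubscribe-Post` (RFC 8058). The sketch below sets both with Python’s standard `email` package, along with a real `Reply-To` address; every address and URL is a placeholder, and your server must actually honor a POST to that URL.

```python
from email.message import EmailMessage


def build_campaign_email(to_addr: str, unsubscribe_url: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = "Team Example <hello@example.com>"  # a real, monitored address
    msg["Reply-To"] = "hello@example.com"
    msg["To"] = to_addr
    msg["Subject"] = "Your monthly update"
    # RFC 2369: lets mail clients show their own unsubscribe link next to the sender.
    msg["List-Unsubscribe"] = f"<{unsubscribe_url}>, <mailto:unsubscribe@example.com>"
    # RFC 8058: tells clients a single POST to the HTTPS URL unsubscribes (one-click).
    msg["List-Unsubscribe-Post"] = "List-Unsubscribe=One-Click"
    msg.set_content(
        "Hi there,\n\nHere's what's new this month.\n\n"
        f"Don't want these emails anymore? Unsubscribe in one click: {unsubscribe_url}\n"
    )
    return msg


print(build_campaign_email("ana@example.com", "https://example.com/u/abc123"))
```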

18. Personalize your email campaigns

Email personalization is among the most basic email marketing best practices. When someone uses our name, we respond on a subconscious level.

In its simplest form, personalization happens when using your customer’s first name in your subject lines.

According to email automation statistics, 1.75% of email revenue comes from personalized email marketing campaigns! Personalization doesn’t end there, though.

Customers expect to receive relevant content that matches their taste. So, you need to leverage dynamic content and provide unique customer experiences, based on user data you’ve collected through your online forms.
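A personalized subject line needs a sensible fallback for subscribers whose details you never collected. Here is a minimal sketch using Python’s built-in `string.Template`; the `$first_name`/`$city` fields and the fallbacks are illustrative, and your email platform will have its own merge-tag syntax.

```python
from string import Template


def render(template: str, subscriber: dict, fallback_name: str = "there") -> str:
    """Fill merge fields, falling back when a field is missing or blank."""
    first_name = (subscriber.get("first_name") or "").strip() or fallback_name
    city = subscriber.get("city") or "your area"
    return Template(template).safe_substitute(first_name=first_name, city=city)


subject = "Hi $first_name, new arrivals just landed in $city"
print(render(subject, {"first_name": "Maria", "city": "Athens"}))
print(render(subject, {}))
```

`safe_substitute` leaves any unknown placeholder untouched instead of raising an error, which is usually preferable to a failed send.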

In a nutshell, personalization leads to a significant increase in your engagement and conversion rates, so selecting a user-friendly and reliable email marketing tool can be a lifesaver.

19. Automate and personalize your messages

An email marketing best practice that couldn’t be absent from this list is automation. Modern email marketing platforms provide basic and advanced campaign automation options that marketers should leverage.

On a basic level, you need to create automated workflows that save you time on repetitive tasks, such as welcome email sequences, cart abandonment campaigns, etc.

You shouldn’t stop there, though. Combine the power of automation and personalization to form deeper bonds with your subscribers and increase engagement. This could be as simple as sending an email to wish them a happy birthday once a year. Be sure to accompany it with a discount voucher or exclusive offer for their special day!

Moosend provides you with all the subscription form templates you’ll need to collect dates of birth (DOBs) during sign-up. Think about which personal dates fit your product or service and set up automated campaigns around them.
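A birthday automation boils down to a daily check of who has a birthday today. This sketch assumes you stored each subscriber’s date of birth as a `date` under a hypothetical `dob` key; people born on February 29 are greeted on February 28 in non-leap years (a design choice, not a rule).

```python
import calendar
from datetime import date


def is_birthday(dob: date, today: date) -> bool:
    """Match month and day; Feb 29 birthdays fall on Feb 28 in non-leap years."""
    if (dob.month, dob.day) == (2, 29) and not calendar.isleap(today.year):
        return (today.month, today.day) == (2, 28)
    return (today.month, today.day) == (dob.month, dob.day)


def birthday_recipients(subscribers, today: date):
    """Emails of subscribers whose hypothetical 'dob' field matches today."""
    return [s["email"] for s in subscribers if s.get("dob") and is_birthday(s["dob"], today)]


subscribers = [
    {"email": "ana@example.com", "dob": date(1990, 5, 17)},
    {"email": "ben@example.com"},  # no DOB collected
]
print(birthday_recipients(subscribers, date(2024, 5, 17)))  # ['ana@example.com']
```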

You can leverage all our automated workflows just by signing up for a free account!

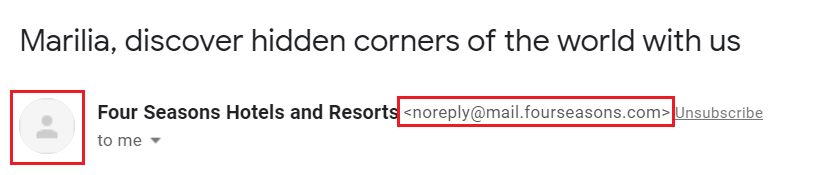

20. Avoid a ‘no-reply’ sender address and increase legitimacy

What’s the point in personalizing your email message or subject line if it looks like it was sent by a bot? Or in leaving your BIMI record (brand logo) blank? It’s a little ironic.

Steer clear of a “no-reply@” sender address. You need to build trust with your audience, not the opposite. Moreover, they’re more likely to open your emails if they believe they are composed by a real human!

You may even choose to encourage your subscribers to reply, since collecting feedback is essential for your entire marketing plan.

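Of course, there is also the CAN-SPAM Act that companies have to comply with. It prohibits false or misleading header information, including the “From” line, and requires a clear, working way for recipients to opt out, so keep your sender details honest and make sure your opt-out mechanism actually works.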

It’s advisable to increase the legitimacy of your emails by setting up DMARC, which then allows you to use your brand logo on supporting email clients as part of BIMI. This is an additional layer of authenticity to your email messages, which establishes trust with your audience.
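BIMI is generally only honored when your DMARC record is at enforcement, meaning `p=quarantine` or `p=reject`, applied to all mail (`pct=100`). This sketch checks a DMARC TXT record string (the kind published at `_dmarc.yourdomain.com`) against that commonly cited requirement; it doesn’t query DNS, and mailbox providers may impose additional conditions, such as a verified mark certificate.

```python
def parse_dmarc(record: str) -> dict:
    """Parse a DMARC TXT record like 'v=DMARC1; p=reject; rua=mailto:...' into tags."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_ready_for_bimi(record: str) -> bool:
    """True if the policy is enforced (quarantine/reject) for 100% of mail."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return False
    enforced = tags.get("p", "").lower() in {"quarantine", "reject"}
    full_coverage = tags.get("pct", "100") == "100"  # pct defaults to 100 when absent
    return enforced and full_coverage


print(dmarc_ready_for_bimi("v=DMARC1; p=reject; rua=mailto:dmarc@example.com"))
```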

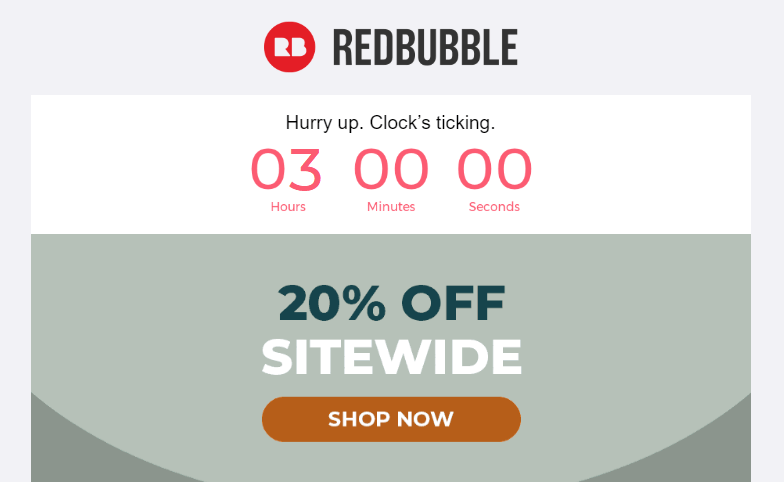

21. Make your emails time-sensitive

There’s nothing like a bit of urgency to spur consumers into taking action. Fear of missing out (FOMO) is a basic consumer instinct that every marketer should target.

So, set a time limit in which your customers can take action, and you’ll see an increase in clicks and conversion rates.

Begin by introducing urgency in your email subject lines.

- ⏳ Hours left: FREE Lipstick for National Lipstick Day! (MAC Cosmetics)

- ⏰ Hurry, Time is Running Out on These Hot Deals! (Nikon)

- Prices Go Up Tomorrow (MVMT)

The combination of emojis and the right wording can give these subject lines an extra conversion boost.

Of course, if you want to skyrocket your campaigns’ efficiency, you shouldn’t ignore countdown timers in emails!

Timers are a feature that very few email service providers offer natively. Luckily for you, Moosend gives you all the time(rs) in the world plus useful email templates just by signing up for a free account!

Here is an example by Redbubble.

You can do it like Redbubble and better! Just select a template from our Template Library and drag-and-drop a “Timer” Element into it.

22. Remind subscribers why they signed up

There will be people on your list who have entirely forgotten ever signing up. So, it’s ideal to remind them WHY you’re emailing them. This is particularly important if you’re not a regular sender.

Let’s say you send a monthly newsletter to your list on the last day of the month. Someone signs up on the 1st of January. By the time the 31st rolls around, they’ve completely forgotten all about you and your website. And they hit the spam button.

Even if you’re a regular sender, reminding people why they signed up is among the most important email marketing best practices you need!

The global brand Converse, for instance, reminds subscribers why they signed up at the bottom of their newsletter.

Why at the bottom? People generally scroll to the bottom to look for an unsubscribe link, so by putting the reminder there, you can catch customers (and remind them) before they unsubscribe. This gives you a better chance of changing their minds and keeping them on your list.

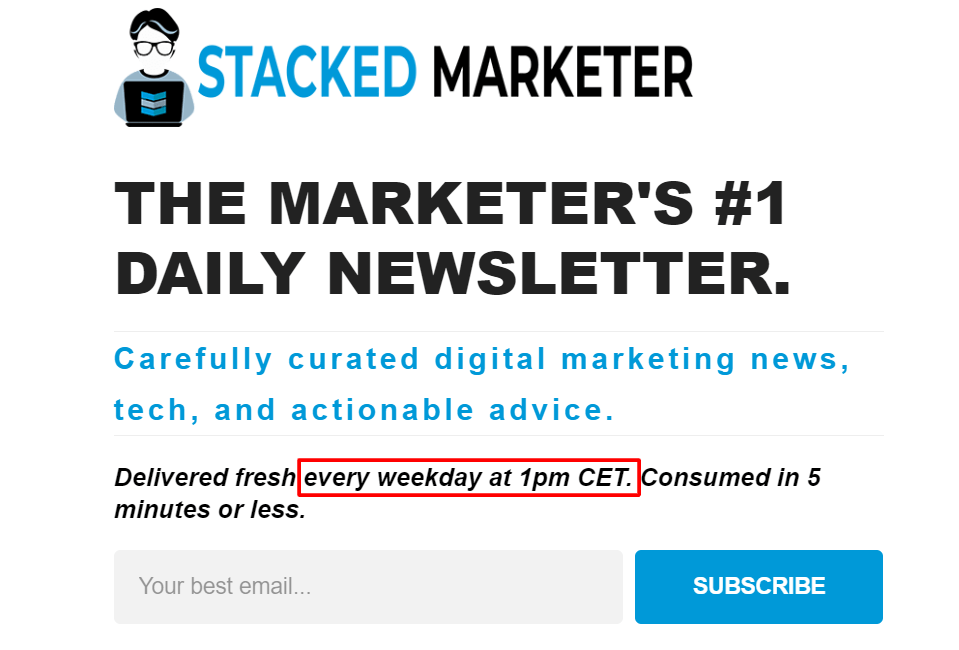

23. Make a promise from Day 1 (and keep it)

Use your online signup form to indicate what a new subscriber should expect. Will they get a weekly newsletter? A ping when you publish a new blog post? Notification of special offers or discounts?

Whatever you promise when someone signs up to your newsletter, stick to that promise. After all, breaking it is the number one way to annoy your subscribers, since you’re delivering something they didn’t opt in for.

For example, Stacked Marketer has an email signup box on their website. There, they promise to send fresh content every day at 1 pm. And that’s exactly what they do.

24. Optimize your landing pages

Consumers nowadays are busy and expect to see certain things when they click on an email’s call to action. Therefore, creating a seamless transition for subscribers clicking on your emails is of the utmost importance.

Carefully crafting a landing page that is aligned with your goals and your email content shows consistency and promotes a positive user experience and trust in the brand.

It’s also very important to use tracking tools to measure which emails and landing pages had the best performance so that you can form a winning strategy.
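One common way to attribute landing-page visits to a specific email and link is to append UTM parameters to every URL. This sketch uses Python’s standard `urllib.parse`; the parameter values are examples, and your analytics tool decides how they’re reported.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_utm(url: str, campaign: str, content: str,
            source: str = "newsletter", medium: str = "email") -> str:
    """Append UTM tags to a link, keeping any query parameters it already has."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # e.g. which CTA in the email was clicked
    })
    return urlunparse(parts._replace(query=urlencode(query)))


print(add_utm("https://example.com/spring-sale?ref=1", campaign="spring_sale", content="hero_cta"))
```

Using a distinct `utm_content` value per CTA lets you see which link in the email actually drove the landing-page visit. Note that converting the query to a `dict` keeps only the last value of any repeated parameter.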

If you’re curious how you can propel your landing page efficiency and boost conversions, check our landing page optimization tips!

25. Reward your loyal email subscribers

Loyal customers are the lifeblood of your business. They open and click your emails, and choose your store to make their purchases (instead of your competitors).

So reward them for their loyalty and engagement. There are various simple ways you can do this, from special discounts or offers to early access for exclusive products and services.

That’s a great way to make them feel appreciated. And you’re not losing anything, right? It’s a mutual benefit!

Some businesses also run reward points schemes for their customers. This is, of course, a great way to retain them and incentivize them to stay with you.

26. Integrate email marketing with social media

Social media. The holy land of memes, huge audiences, and Instagram influencer marketing!

Nearly everyone has a social media account to communicate with their friends. But most importantly, people use it to check the news and interact with branded content. Since social media is such a big player, you need to integrate it with your email marketing.

And the way to do it? Well, take a look at Rothy’s email:

This email campaign is a great way to draw attention to your social media profiles, and it doubles as the perfect social media marketing hack to expand your mailing list.

You can always add social media buttons at the bottom of your email and let your cross-promotional efforts run on autopilot.

What’s next?

We’ve covered a lot in this guide, so if you have any questions or comments specific to your campaigns, then please leave them below.

We’ll also be regularly updating with more email marketing best practices. If you liked what you read, you might want to bookmark this page or share it with your friends.

Email marketing shouldn’t be a hassle. If you still haven’t picked the right tools to make email marketing a piece of cake, make sure to try our email marketing platform.

What are you waiting for? Email away!

Published by