How Does ChatGPT Work? The Simplified Version [2024]

OpenAI’s ChatGPT is everywhere these days, and tech giants like Microsoft have already recognized its potential for the future.

With less time on our hands and more to do, many of us now turn to the software for instant answers: we simply type a question into the chat box and press send.

But how does ChatGPT work? How does it know the answers, and what are the limitations of the content it generates?

Find out in the post below:

- What is ChatGPT?

- How ChatGPT works

- ChatGPT applications

What Is ChatGPT?

ChatGPT is a computer program developed by OpenAI. It is a chatbot that provides conversational responses to users.

But that’s the short version. To define ChatGPT we need to define GPT first.

GPT, which stands for Generative Pre-trained Transformer, is a family of neural networks that use the Transformer architecture to give applications the ability to create text, music, and images. These models are among the most prominent in artificial intelligence today and power apps like ChatGPT and DALL-E.

Individuals and organizations across industries use GPT models and generative AI to power AI chatbots and generate content. For example, some email marketing platforms let users generate email copy simply by entering a topic.

OpenAI has released many models, including GPT, GPT-2, GPT-3, and GPT-4, all of which are deep learning models. ChatGPT (accessed through chat.openai.com) is built on a GPT model that uses the Transformer architecture to generate responses. Essentially, it’s a version of the GPT model that has been optimized and fine-tuned for conversation-like interactions.

GPT-3.5 vs. GPT-4

As of today, OpenAI offers two versions. The GPT-3.5 version of ChatGPT is available to both free and Plus users and is great for generating responses for everyday tasks.

GPT-4, on the other hand, is available only to ChatGPT Plus users and offers more creativity and advanced reasoning (with a cap of 50 messages every 3 hours).

While OpenAI hasn’t released much specific information, the main differences between the two versions include:

| Feature | GPT-3.5 | GPT-4 |
| --- | --- | --- |
| Parameters | 175 billion (GPT-3) | Not officially disclosed |
| Memory (context window) | About 4,096 tokens (roughly 3,000 words) | Up to 32,768 tokens (roughly 25,000 words) |
| Multimodal input | Text only | Text and images |
| Personalization | Available | Available – more creativity |
| Training data cutoff | Up to September 2021 | Up to December 2023 |
| Plugins | Unavailable | Available |
| Multilingual support | Yes | Yes |
| Web page searches | No | Yes (through plugins) |

Using ChatGPT is a great way to get quick AI-generated responses. However, keep in mind that it is a software application trained on specific datasets, so its capabilities are limited and its replies rely on patterns found in its training data.

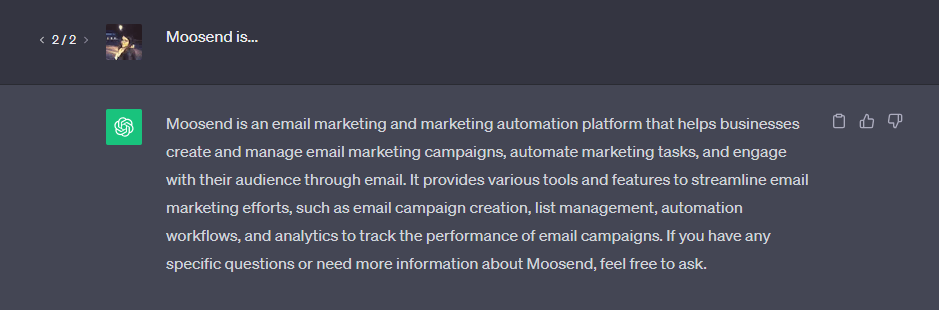

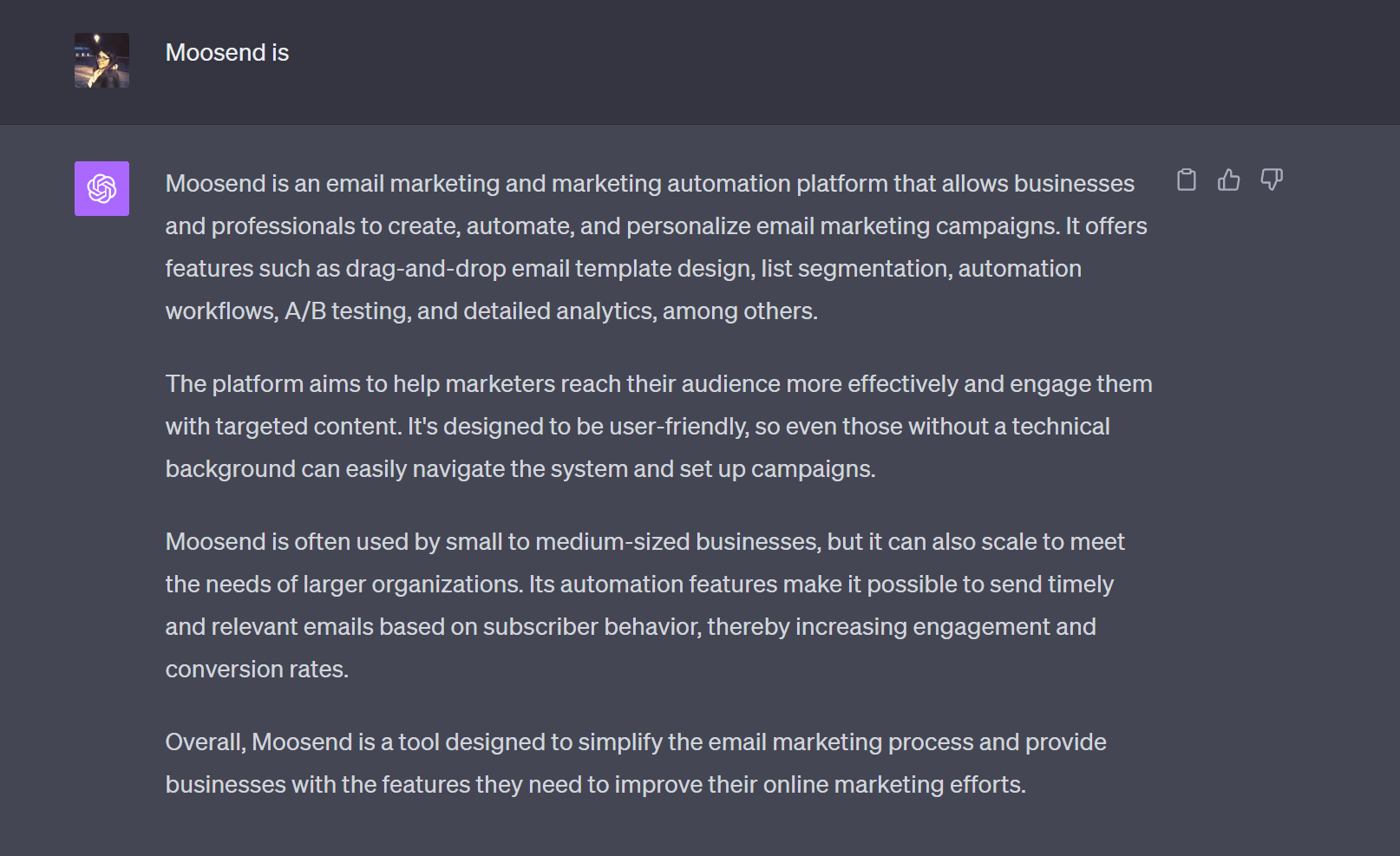

To better understand the difference between GPT-3.5 and GPT-4, we generated two responses.

Here’s the GPT-3.5 answer to our query:

And here’s the GPT-4 version:

As you can see, the GPT-4 response is longer and more intuitive than the GPT-3.5 one.

Beta Features

As ChatGPT evolves, OpenAI keeps developing new features to make it one of the most powerful and versatile AI tools, able to compete with rivals such as Google’s Bard.

Currently, ChatGPT Plus users can:

- Browse with Bing: a version of the tool that can browse the web to get information on recent topics and events.

- Use plugins: Expedia, FiscalNote, Instacart, KAYAK, Klarna, Milo, OpenTable, Shopify, Slack, Speak, Wolfram, and Zapier.

- Advanced data analysis: a version that can write and execute Python code to analyze and optimize data.

Now that we’ve covered the basics, let’s see how ChatGPT works by breaking down its components.

How Does ChatGPT Work?

As mentioned, ChatGPT is a state-of-the-art language model that combines Transformer architecture, pre-training on text data, fine-tuning, and natural language understanding to generate contextually relevant and safe responses in natural language conversations.

Now, let’s look at the components and steps involved in going from your question (“X?”) to the model’s answer (“Y.”).

Architecture

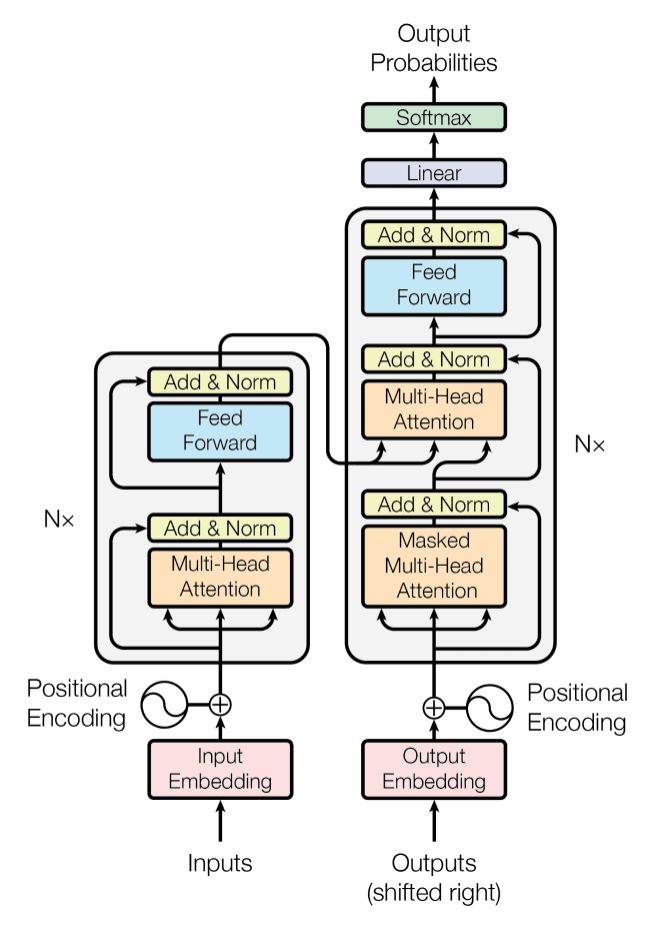

The core of GPT is the Transformer architecture, a type of neural network architecture introduced in 2017 in the paper “Attention Is All You Need” by Vaswani et al., notable for its self-attention mechanism.

Nowadays, this form of architecture is used for many natural language processing (NLP) and machine learning tasks. It was originally designed to improve machine translation, but now it’s widely used for text generation, summarization, and much more.

Unlike recurrent neural networks (RNNs) or long short-term memory networks (LSTMs), the Transformer architecture doesn’t process data sequentially. Instead, it processes all the tokens in a sequence in parallel, which enables faster processing and training.

Below are the main components of the Transformer:

- Encoder-Decoder Structure: The Encoder processes the input data, while the Decoder generates the output. Each is made up of multiple identical layers.

- Attention Mechanisms: The innovative self-attention mechanism lets the model weigh how relevant every other word in the input is when processing each word.

- Positional Encoding: Because words are processed in parallel, the model has no built-in sense of word order. Positional encodings are added to the word embeddings to tell the model where each word sits in the sequence.

- Layer Normalization: For better training, normalization techniques are applied to stabilize the network.

- Feed-forward Neural Networks: Each attention layer is followed by feed-forward neural networks applied independently to each position.

- Scalability: Because its computations can be parallelized, the Transformer scales well to large and complex datasets.
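To make two of these components more concrete, here’s a minimal pure-Python sketch of sinusoidal positional encoding (the scheme proposed in “Attention Is All You Need”) and layer normalization. The function names and toy embedding values are our own illustrations. GPT models such as GPT-2 actually learn their position embeddings during training, and real layer normalization also applies a learned scale and shift, which we omit here.

```python
import math


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal positional encodings as defined in "Attention Is All You Need".

    Even dimensions use sine and odd dimensions use cosine; each sine/cosine
    pair shares a frequency that shrinks as the dimension index grows.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            pair_index = i - i % 2  # 2k for both dimension 2k and 2k+1
            angle = pos / (10000 ** (pair_index / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def layer_norm(x: list[float], eps: float = 1e-5) -> list[float]:
    """Rescale a vector to zero mean and (almost exactly) unit variance.

    Real layers also multiply by a learned gain and add a learned bias.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


# Toy 4-dimensional "word embeddings" for a three-word input (made-up values).
embeddings = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.3, 0.3]]
pe = positional_encoding(len(embeddings), 4)

# Positional information is simply added to each embedding...
with_positions = [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]
# ...and layer normalization keeps the values in a stable range.
normalized = [layer_norm(v) for v in with_positions]
```

Note that the third embedding is identical in every dimension; only the added positional encoding distinguishes it from the same word appearing elsewhere in the sequence.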

ChatGPT is based on the Transformer architecture described above. GPT models stack multiple decoder layers on top of each other, and each layer consists of a self-attention mechanism followed by a feed-forward neural network.

What you need to remember here is that ChatGPT doesn’t use the encoder of the original Transformer architecture; it uses only the decoder part. In the decoder, each token can attend only to the tokens that come before it, which is what allows the model to generate text one token at a time.
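Here’s a simplified sketch of the masked (causal) self-attention used in decoder-only models, written in plain Python with no deep learning library. As a simplifying assumption, it uses the same vectors as queries, keys, and values; real models compute these with learned projection matrices and run many attention heads in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the max for numerical stability; -inf scores become weight 0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q, k, v):
    """Scaled dot-product attention with a causal mask.

    q, k and v are lists of vectors, one per token. Token i may only attend
    to tokens 0..i, which is the masking used in decoder-only models.
    """
    d_k = len(k[0])
    outputs = []
    for i, query in enumerate(q):
        scores = []
        for j, key in enumerate(k):
            if j > i:
                scores.append(float("-inf"))  # future token: masked out
            else:
                dot = sum(a * b for a, b in zip(query, key))
                scores.append(dot / math.sqrt(d_k))
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * val[d] for w, val in zip(weights, v)) for d in range(len(v[0]))]
        outputs.append(out)
    return outputs


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
out = causal_self_attention(x, x, x)
```

Because of the mask, the first token can only attend to itself, and changing a later token never changes the output computed for an earlier one.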

Technology

The actual computations are carried out using deep learning libraries such as TensorFlow or PyTorch.

The model, especially large versions like GPT-3 or GPT-4, requires significant computational resources, often running on specialized hardware like GPUs or TPUs.

In essence, ChatGPT and its underlying GPT model utilize the power of the Transformer Architecture, massive amounts of data, and deep learning techniques to produce human-like text based on the patterns it has learned from its training data.

Pre-Training

During pre-training, models like ChatGPT are not trained with reinforcement learning. Instead, they learn in a self-supervised way from a large dataset, with the goal of predicting the next word (more precisely, the next token) in a sequence. This large corpus of text can include books, articles, and websites such as Wikipedia.
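To illustrate the next-word prediction objective, here’s a toy sketch that “trains” a bigram model by counting which word follows which, then scores text with the average negative log-likelihood, the same kind of loss that pre-training minimizes. This is only an illustration: real GPT models predict tokens rather than whole words, condition on the entire preceding context rather than just the previous word, and learn their probabilities with a neural network instead of counting.

```python
import math
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) by counting word pairs."""
    words = corpus.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    model = {}
    for word, nexts in counts.items():
        total = sum(nexts.values())
        model[word] = {n: c / total for n, c in nexts.items()}
    return model


def next_word_loss(model: dict[str, dict[str, float]], text: str) -> float:
    """Average negative log-likelihood of each next word (lower is better)."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    nll = 0.0
    for current, nxt in pairs:
        p = model.get(current, {}).get(nxt, 1e-9)  # tiny floor for unseen pairs
        nll -= math.log(p)
    return nll / len(pairs)


model = train_bigram("the cat sat on the mat . the cat ate .")
print(model["the"])  # "cat" follows "the" twice, "mat" once
print(next_word_loss(model, "the cat sat"))  # familiar text: low loss
print(next_word_loss(model, "the mat sat"))  # unseen pair: much higher loss
```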

Through this process, the model picks up grammar, syntax, world facts, some reasoning abilities (at a superficial level), and even some of the biases present in the data.

To better understand its limitations, it’s good to know that the model doesn’t know which specific documents were part of its training set, and it can’t look up or retrieve individual documents from it. It also can’t access confidential or classified information.

So, in a nutshell, pre-training relies on a large body of text that, according to OpenAI, comes from publicly available sources and licensed data. This helps ChatGPT become a general-purpose language model that understands the structure of a user’s query and provides the most suitable response based on the patterns it has learned.

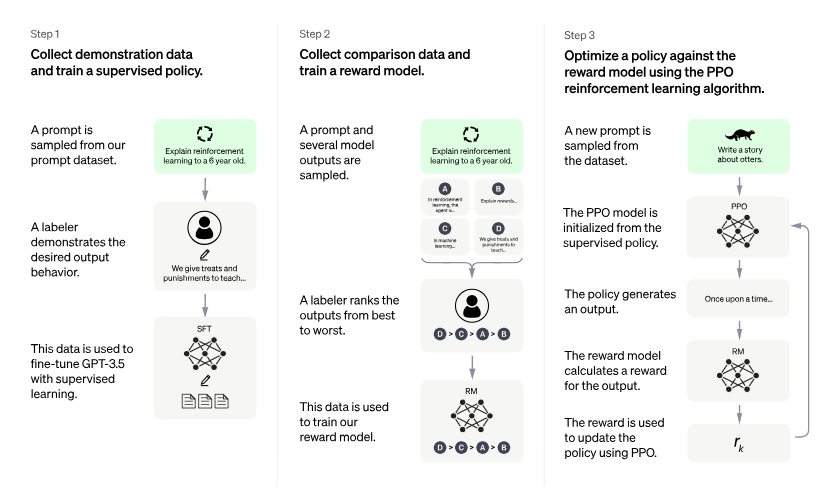

Fine-Tuning

The second part of training includes a process called fine-tuning. The purpose is to further train the model on specific datasets or tasks to enhance the accuracy and relevancy of the responses.

For example, fine-tuning helps the model to better understand and follow instructions, generate more coherent text, and avoid generating harmful or inappropriate content, which is prohibited in ChatGPT.

According to OpenAI, ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022, using reinforcement learning from human feedback (RLHF). Moreover, ChatGPT and GPT-3.5 were trained on an Azure AI supercomputing infrastructure.
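OpenAI has described ChatGPT’s fine-tuning as using reinforcement learning from human feedback (RLHF), in which a reward model learns from human rankings of responses. The sketch below shows one common way to train such a reward model: a pairwise loss that pushes the preferred response’s score above the rejected one’s. The linear model and its two hand-made features (“helpful” and “rude”) are hypothetical stand-ins for a large neural network, and the later reinforcement learning step that uses the reward model is not shown.

```python
import math


def reward(w: list[float], features: list[float]) -> float:
    """A hypothetical linear reward model: score = w . features."""
    return sum(wi * fi for wi, fi in zip(w, features))


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), written as log(1 + exp(-diff)).

    Small when the human-preferred response scores higher.
    """
    return math.log1p(math.exp(-(r_chosen - r_rejected)))


def train_reward_model(comparisons, dim: int, lr: float = 0.1, epochs: int = 200) -> list[float]:
    """Fit the weights by gradient descent on the pairwise loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            diff = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(diff) = -1 / (1 + exp(diff)), i.e. -sigmoid(-diff)
            grad_scale = -1.0 / (1.0 + math.exp(diff))
            for i in range(dim):
                w[i] -= lr * grad_scale * (chosen[i] - rejected[i])
    return w


# Each pair is (preferred response, rejected response), described by two
# hypothetical features: [is_helpful, is_rude].
comparisons = [
    ([1.0, 0.0], [0.0, 0.0]),
    ([1.0, 0.0], [1.0, 1.0]),
    ([0.0, 0.0], [0.0, 1.0]),
]
w = train_reward_model(comparisons, dim=2)
```

After training, the helpful feature gets a positive weight and the rude feature a negative one, so the reward model has absorbed the preferences expressed in the human rankings.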

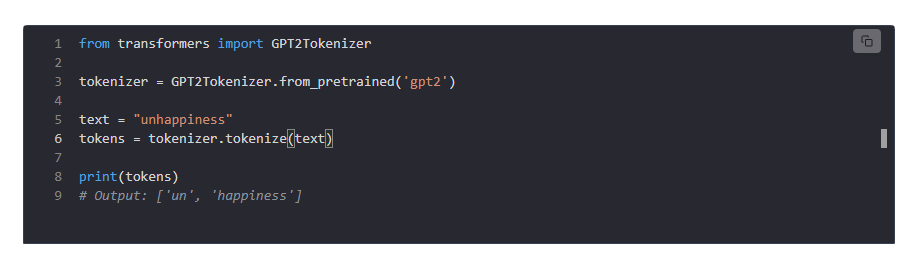

Tokenization

In this phase, the input text is broken down into chunks called tokens, which can be as short as a single character or as long as a whole word. Many tokens are subwords, and how text is split depends on the language and context.

Each token is then represented as a vector called an embedding. Embeddings are numerical representations of words or subwords that capture their meanings and the relationships between them.
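Here’s a minimal sketch of an embedding lookup and of cosine similarity, a common way to measure how close two embeddings are. The three-dimensional vectors are made-up values for illustration; real models index embeddings by token ID and learn vectors with hundreds or thousands of dimensions during training.

```python
import math

# Hypothetical 3-dimensional embeddings (made-up values, not from any model).
embedding_table = {
    "king": [0.8, 0.65, 0.1],
    "queen": [0.78, 0.7, 0.15],
    "apple": [0.1, 0.05, 0.9],
}


def embed(tokens: list[str]) -> list[list[float]]:
    """Look up one vector per token, as a model's first layer does."""
    return [embedding_table[t] for t in tokens]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king, queen, apple = embed(["king", "queen", "apple"])
print(cosine_similarity(king, queen))  # close to 1: related words
print(cosine_similarity(king, apple))  # much lower: unrelated words
```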

According to OpenAI, both versions use a tokenization process to convert input text into a sequence of tokens, which are then fed into the Transformer architecture.

Specifically, GPT-3 uses Byte Pair Encoding (BPE), an algorithm that builds its vocabulary by repeatedly merging the most frequent pairs of adjacent symbols, to break text down into manageable tokens. The tokenized text is then processed by the neural network using multiple layers of Transformer decoders.
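Here’s a simplified sketch of how BPE learns its vocabulary: start from individual characters and repeatedly merge the most frequent adjacent pair. It isn’t OpenAI’s implementation; GPT-2 and GPT-3 apply BPE to bytes rather than characters and use additional pre-processing rules, which this sketch skips.

```python
from collections import Counter


def get_pair_counts(words: dict[tuple[str, ...], int]) -> Counter:
    """Count adjacent symbol pairs across all words, weighted by frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def merge_pair(words: dict[tuple[str, ...], int], pair: tuple[str, str]) -> dict[tuple[str, ...], int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: dict[tuple[str, ...], int] = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def learn_bpe(corpus: str, num_merges: int):
    """Learn BPE merge rules, starting from individual characters."""
    words: dict[tuple[str, ...], int] = {}
    for w in corpus.split():
        words[tuple(w)] = words.get(tuple(w), 0) + 1
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        words = merge_pair(words, best)
        merges.append(best)
    return merges, words


merges, words = learn_bpe("low low low lower lowest", 2)
print(merges)
print(words)
```

With this corpus, after two merges the frequent word “low” has become a single token, while rarer words such as “lowest” are still split into smaller pieces.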

Beyond tokenization, OpenAI hasn’t published many technical details about GPT-4, although it is generally understood to improve on its predecessors in areas such as model size and training data.

Model Depth

GPT models are deep, meaning they have many layers of the Transformer with each layer capturing different types of information and patterns from the input.

As the input passes through these layers one after another, the model refines its internal representation of it, capturing increasingly complex patterns.

To get a sense of model depth, GPT-2 ranged from 117 million parameters with 12 layers to 1.5 billion parameters with 48 layers. GPT-3 increased this to about 175 billion parameters with 96 layers.
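To see where these parameter counts come from, here’s a rough calculator for a GPT-2-style decoder-only Transformer. It assumes the layout of the released GPT-2 models (a feed-forward layer four times wider than the model, biases throughout, two layer norms per block, and an output layer that reuses the token embeddings), a 50,257-token vocabulary, and the widths published for GPT-2 (768 and 1,600) and GPT-3 (12,288, with a 2,048-token context). Treat it as an estimate, not OpenAI’s own accounting.

```python
def gpt_param_count(n_layer: int, d_model: int, vocab: int = 50257, n_ctx: int = 1024) -> int:
    """Approximate parameter count for a GPT-2-style decoder-only model.

    Per layer: attention (Q, K, V and output projections: 4*d^2 weights + 4*d
    biases), a 4x-wide MLP (8*d^2 weights + 5*d biases), two layer norms (4*d).
    Assumes the output layer shares weights with the token embedding.
    """
    per_layer = 12 * d_model**2 + 13 * d_model
    embeddings = vocab * d_model + n_ctx * d_model  # token + position embeddings
    final_norm = 2 * d_model
    return n_layer * per_layer + embeddings + final_norm


for name, n_layer, d_model, n_ctx in [
    ("GPT-2 small", 12, 768, 1024),
    ("GPT-2 XL", 48, 1600, 1024),
    ("GPT-3", 96, 12288, 2048),
]:
    total = gpt_param_count(n_layer, d_model, n_ctx=n_ctx)
    print(f"{name}: {total:,} parameters")
```

This gives about 124 million, 1.56 billion, and 174.6 billion parameters. The small GPT-2 figure is higher than the 117 million OpenAI originally reported; the publicly released weights of that model contain about 124 million parameters.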

OpenAI hasn’t officially disclosed GPT-4’s parameter count; unofficial estimates put it at about 1.76 trillion. According to OpenAI, GPT-4 can process approximately 25,000 words in a single query, which is significantly more than its predecessor.
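The “25,000 words” figure is consistent with the GPT-4 variant that has a 32,768-token context window, using OpenAI’s rule of thumb that one token is roughly three-quarters of an English word. The sketch below does that conversion; the token limits are those of the API models at launch, and which variant ChatGPT itself used at a given time is an assumption.

```python
# OpenAI's rough rule of thumb for English text: 1 token is about 0.75 words.
WORDS_PER_TOKEN = 0.75

# Context window sizes of the API models at launch (assumed, not specific
# to the ChatGPT app at any given time).
CONTEXT_WINDOWS = {
    "gpt-3.5-turbo": 4096,
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
}


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


for model, tokens in CONTEXT_WINDOWS.items():
    print(f"{model}: {tokens:,} tokens is roughly {tokens_to_words(tokens):,} words")
```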

Generating Responses

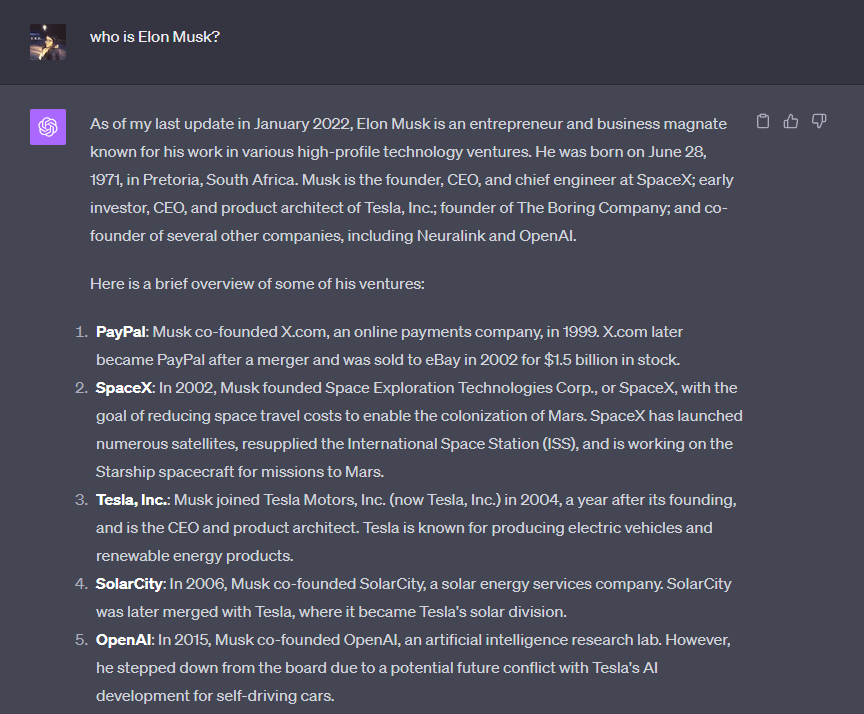

To generate a response, the model takes a sequence of tokens as input and produces output tokens one at a time, each chosen based on all the tokens that came before it. The output tokens are then converted back into human-readable text.

It’s good to know that the model doesn’t have the capacity for human understanding and reasoning. Instead, it relies on identifying patterns in the dataset it was trained on and uses those patterns to generate answers.

The output can be influenced by different techniques, such as the temperature setting, which controls how random the choice of each next token is, or prompt engineering.
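The sketch below ties these ideas together: it generates a reply one token at a time, feeding each sampled token back in, and applies a temperature to the next-token probabilities before sampling. The probability table is a hypothetical stand-in for a trained model, and unlike a real GPT model it conditions only on the previous token rather than on the whole conversation.

```python
import random

# Hypothetical next-token probabilities standing in for a trained model.
# A real model computes these from the whole preceding context.
NEXT_TOKEN_PROBS = {
    "<start>": {"I'm": 0.7, "Not": 0.3},
    "I'm": {"good": 0.8, "fine": 0.2},
    "Not": {"bad": 1.0},
    "good": {"<end>": 1.0},
    "fine": {"<end>": 1.0},
    "bad": {"<end>": 1.0},
}


def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Reshape a distribution as p ** (1 / T), then renormalize.

    This equals dividing the logits by T before the softmax: T < 1 makes the
    likeliest token even more likely, T > 1 flattens the distribution.
    """
    scaled = {tok: p ** (1.0 / temperature) for tok, p in probs.items()}
    total = sum(scaled.values())
    return {tok: s / total for tok, s in scaled.items()}


def generate(temperature: float = 1.0, seed=None, max_tokens: int = 10) -> str:
    """Sample tokens one at a time, feeding each choice back in as input."""
    rng = random.Random(seed)
    token, output = "<start>", []
    for _ in range(max_tokens):
        probs = apply_temperature(NEXT_TOKEN_PROBS[token], temperature)
        token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if token == "<end>":
            break
        output.append(token)
    return " ".join(output)


print(generate(temperature=0.01, seed=0))
print(generate(temperature=1.5, seed=0))
```

With a very low temperature, the most likely reply (“I’m good”) wins almost every time; higher temperatures make “I’m fine” or “Not bad” more likely.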

To get a response to your ChatGPT prompt or query, just type it in the chat and hit send. ChatGPT will then answer based on its training data.

For example, here’s the response to our query: “Who is Elon Musk?”

As you can see, the model lists Elon Musk’s ventures but leaves out his acquisition of Twitter (now X) in October 2022, because its training data didn’t include that information.

Knowledge Limitations

As we mentioned earlier, ChatGPT doesn’t have human understanding but rather relies on pattern recognition to generate responses.

This means that the model doesn’t carry memories from one conversation to the next, and it isn’t aware of events or advancements after the cutoff date of its training data (see the comparison table above).

More specifically, OpenAI lists the limitations of ChatGPT on its website. In a nutshell:

- It may provide plausible-sounding but incorrect or nonsensical answers.

- ChatGPT is sensitive to phrasing: it may claim not to know the answer to a question but respond correctly when the prompt is slightly rephrased.

- The model can be excessively verbose, overusing certain phrases.

- When a query is ambiguous, ChatGPT usually guesses what the user intended instead of asking clarifying questions.

- The model will sometimes respond to inappropriate prompts or show biased behavior despite OpenAI’s use of the Moderation API to block such content.

Biases

OpenAI has informed users of the model’s potentially biased behavior.

Since it’s trained on large public datasets found on the internet, GPT can sometimes reflect biases present in them when generating text. Thus, users are advised to check the validity of ChatGPT responses to avoid misinformation.

Moreover, regarding plagiarism, the goal of the model is to provide original and relevant responses. However, there’s always a possibility that the generated text could resemble existing content due to the large datasets it’s been trained on.

If you intend to use ChatGPT for academic purposes, be careful: generated text may closely resemble existing sources, which can lead to plagiarism issues.

ChatGPT Applications

While ChatGPT is designed for conversational AI, the GPT architecture can be used in various NLP tasks, from translation to Q&A, AI assistants, and more.

Below, you will find some further applications powered by this new technology.

- Content generation: GPT-4 can be used to create written content, including articles, blog posts, and prompts, from creative writing to more technical pieces.

- Email and text automation: It helps you compose emails based on specific criteria you provide.

- Programming: ChatGPT can generate code and help debug issues at a basic level. Plus users can also upload images and ask ChatGPT to write code based on them.

- Data interpretation: The tool can interpret data or describe the steps to carry out a data analysis task.

- Language translation: Basic translation is also possible across many languages.

- Tutoring: ChatGPT can assist in explaining complex topics, ranging from science to history to mathematics. It is increasingly being used in educational technology applications.

- Search engines: It can be used to improve the natural language understanding capabilities of search engines, making them more responsive to user queries.

- Virtual assistants: While ChatGPT is not Siri or Alexa, future versions could offer more detailed and personalized responses.

- Summarization: The model can help in summarizing large blocks of text to save time.

These are just a few of the real-world applications of the GPT model. Fascinating, right?

ChatGPT Even More Simplified

If GPT is all Greek to you, below you’ll find the simplest explanation of how it works.

Now, let’s imagine ChatGPT as a super-smart parrot whose owner has given it access to a lot of books, websites, and messages.

This parrot doesn’t really understand what it’s saying, but it’s very good at mimicking human speech and responding in ways that usually make sense. So, when its owner asks, “Hey Chatter, how are you?” the parrot, after hearing the standard response to this question repeatedly, will simply reply, “I’m good.”

Here’s what it needs to do to give you answers to your questions:

- Mimicking: Chatter has listened to millions of conversations to learn how people talk. It knows which words usually come after other words or phrases. For example, after hearing “How are you?” it learns that “I’m good” is a common response.

- Your Turn: Now, you ask questions or make statements. The parrot doesn’t “understand” you like a human would, but based on all the conversations it has listened to, it can predict a fitting reply.

- Parrot Responds: After the training process, Chatter will choose the responses that fit best and reply, mimicking a real-world conversation. Remember, repetition is the mother of learning.

- No Memory: Just like a parrot, ChatGPT doesn’t remember past conversations. Each time you talk to it, it’s like starting anew.

- Not Always Right: Sometimes the parrot might say something that doesn’t make sense or is incorrect. Other times, it may not understand your phrasing at first, but rewording your question may help. Remember, Chatter is just mimicking speech based on patterns; it doesn’t actually “know” things, but it’s good at recognizing patterns.

And now you have a clear idea of how ChatGPT works!

Understanding How ChatGPT Works

ChatGPT has become a very popular tool not only for individuals but also for businesses that want to benefit from the capabilities of AI.

Leveraging the power of the GPT architecture and the Transformer model, ChatGPT has learned the patterns of human language well enough to generate text that fits the provided context.

You can start using ChatGPT without any prior knowledge of the tool. All you need is a question and you are ready to go.

Frequently Asked Questions (FAQs)

Simple answers to complex questions.

1. Who is the CEO of OpenAI?

The CEO of OpenAI is Sam Altman, who took on the role in 2019.

2. What is Reinforcement learning from human feedback (RLHF)?

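Reinforcement learning from human feedback (RLHF) is a machine learning approach that combines reinforcement learning (RL) with human feedback to train models. Typically, humans rank or rate model outputs, those judgments are used to train a reward model, and the main model is then optimized to produce outputs that the reward model scores highly.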

3. What is a Large Language Model (LLM)?

A Large Language Model (LLM) refers to a machine learning model designed to understand and generate human-like text based on the input it receives. These models are “large” in terms of the number of parameters they have (billions or trillions).

4. Does ChatGPT have a reward model?

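Yes. According to OpenAI, ChatGPT was fine-tuned with reinforcement learning from human feedback (RLHF): human trainers ranked several model responses, those rankings were used to train a reward model, and the model was then optimized against that reward model using Proximal Policy Optimization (PPO). Pre-training, by contrast, relies on predicting the next word in a sequence based on a large dataset of human-generated text.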

5. Is ChatGPT an AI chatbot?

Yes, ChatGPT is a type of AI chatbot designed to interact with users via natural language, answering questions, engaging in conversation, and performing various tasks that involve language comprehension and generation.

Published by
